What Are The Challenges Of Interpreting Artificial Intelligence Results Accurately?

Artificial intelligence (AI) has become an integral part of many industries, from healthcare and finance to transportation and education. As AI systems improve and spread, interpreting their results accurately matters more and more. Doing so is difficult for several reasons. One of the primary challenges is the lack of transparency in AI decision-making. Many AI systems, especially those based on deep learning, are often called "black boxes" because the path from input to output is hard to trace, which makes their results difficult to interpret.

Challenges in Interpreting AI Results

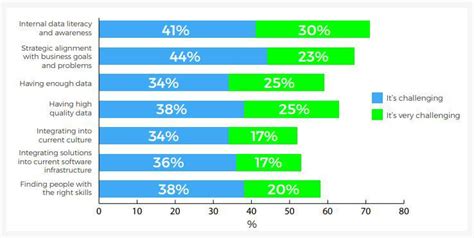

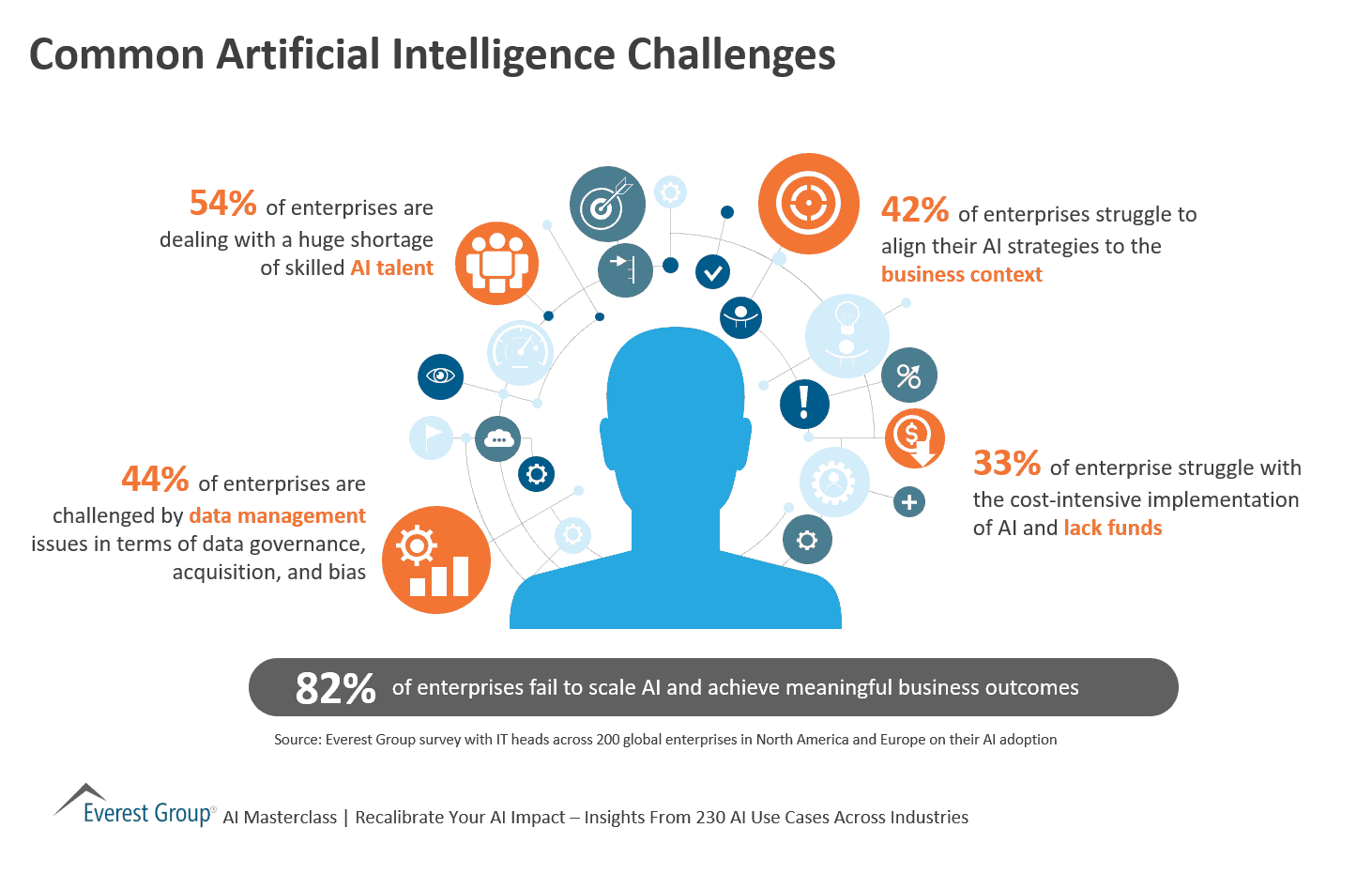

Another significant challenge is bias. Bias can stem from several sources, including biased training data, algorithm design, and human prejudice. A biased system can produce results that are inaccurate or unreliable, leading to incorrect conclusions and decisions. Data quality is also critical: if the data used to train an AI system is incomplete, inaccurate, or outdated, its results may not reflect real-world conditions and can be misinterpreted.
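One simple way to look for the kind of bias described above is to compare how often a system produces a positive result for different groups. The sketch below is illustrative only: the function name `positive_rate_by_group`, the group labels, and the sample predictions are all hypothetical, and a gap between groups is a signal to investigate rather than proof of unfair treatment.

```python
from collections import defaultdict

def positive_rate_by_group(predictions, groups):
    """Return the share of positive (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

# Hypothetical model outputs (1 = approved, 0 = rejected) and group labels.
predictions = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = positive_rate_by_group(predictions, groups)
gap = max(rates.values()) - min(rates.values())

print(rates)  # {'a': 0.75, 'b': 0.25}
print(gap)    # 0.5
```

This difference in positive rates is sometimes called the demographic parity difference. It is only one of several possible fairness checks, and which one is appropriate depends on the application.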

Technical Challenges

From a technical perspective, overfitting and underfitting are two common problems that affect the accuracy of AI results. Overfitting occurs when a model is too complex for the data available and learns the noise in the training data, so it generalizes poorly to new data. Underfitting occurs when a model is too simple to capture the underlying patterns in the data. Both lead to inaccurate results, and both call for careful model selection and hyperparameter tuning.
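The difference between the two can be seen by fitting models of increasing complexity to the same data and comparing the error on the training set with the error on held-out data. The following is a minimal sketch using NumPy polynomial fits on synthetic data; the sine-plus-noise dataset, the chosen degrees (1, 3, 10), and the helper name `fit_and_score` are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (assumed for illustration): a sine curve plus Gaussian noise.
x_train = np.sort(rng.uniform(0.0, 1.0, 20))
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, 0.2, x_train.size)
x_test = np.sort(rng.uniform(0.0, 1.0, 200))
y_test = np.sin(2 * np.pi * x_test) + rng.normal(0.0, 0.2, x_test.size)

def fit_and_score(degree):
    """Fit a polynomial of the given degree; return (train MSE, test MSE)."""
    coeffs = np.polyfit(x_train, y_train, degree)
    train_mse = np.mean((np.polyval(coeffs, x_train) - y_train) ** 2)
    test_mse = np.mean((np.polyval(coeffs, x_test) - y_test) ** 2)
    return train_mse, test_mse

# Degree 1 is too simple for a sine curve, degree 10 is flexible enough
# to start fitting the noise in only 20 training points.
results = {degree: fit_and_score(degree) for degree in (1, 3, 10)}
for degree, (train_mse, test_mse) in results.items():
    print(f"degree={degree:2d}  train MSE={train_mse:.4f}  test MSE={test_mse:.4f}")
```

Training error keeps falling as the degree rises, so it cannot reveal overfitting on its own. An underfit model shows high error on both sets, while an overfit model typically shows low training error alongside noticeably higher test error.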

| Challenge | Description |
|---|---|
| Lack of Transparency | Difficulty in understanding AI decision-making processes |
| Bias | Skewed results caused by biased training data, algorithms, or human prejudices |
| Data Quality | Impact of incomplete, inaccurate, or outdated data on AI results |
| Overfitting | AI system learning noise in training data, resulting in poor generalization performance |
| Underfitting | AI system too simple to capture the underlying patterns in the data |

Best Practices for Interpreting AI Results

To accurately interpret AI results, it is crucial to follow best practices such as model validation, result verification, and human oversight. Model validation involves evaluating the performance of an AI system using metrics such as accuracy, precision, and recall. Result verification involves checking the results of an AI system for errors or inconsistencies. Human oversight involves having human experts review and validate the results of an AI system to ensure accuracy and reliability.
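The three validation metrics named above can be computed directly from a list of true labels and a list of predicted labels. This is a minimal sketch for binary classification in plain Python; the function name `classification_metrics` and the sample labels are hypothetical, and returning 0.0 when a denominator is zero is a convention chosen here, not a universal rule.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    accuracy = (tp + tn) / len(pairs)
    # Precision: of everything predicted positive, how much was correct.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: of everything actually positive, how much was found.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}

# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

metrics = classification_metrics(y_true, y_pred)
print(metrics)  # {'accuracy': 0.625, 'precision': 0.6, 'recall': 0.75}
```

The three numbers differ on the same predictions, which is why a single metric rarely tells the whole story. On imbalanced data in particular, high accuracy can coexist with poor recall.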

Future Implications

As AI continues to evolve and become increasingly pervasive, the need for accurate interpretation of AI results will become even more critical. Regulatory frameworks and industry standards will play a crucial role in ensuring the transparency, explainability, and fairness of AI systems. Furthermore, the development of explainable AI techniques and AI ethics will be essential in building trust in AI systems and ensuring that their results are accurate, reliable, and unbiased.

What are the consequences of inaccurate AI results?

Inaccurate AI results can have severe consequences, including financial losses, damage to reputation, and harm to individuals. For example, inaccurate medical diagnoses or treatment recommendations can lead to adverse health outcomes, while inaccurate financial predictions can result in significant financial losses.

How can AI systems be made more transparent and explainable?

AI systems can be made more transparent and explainable through interpretable model design, feature importance analysis, and bias detection. In addition, attention mechanisms and saliency maps can offer insight into how a system reaches its decisions.
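As one concrete example of feature importance, permutation importance shuffles a single input feature and measures how much the model's error increases: the larger the increase, the more the model relies on that feature. The sketch below is hand-rolled with NumPy for illustration; the function name `permutation_scores`, the synthetic data, and the simple linear model are assumptions, not a reference implementation.

```python
import numpy as np

def permutation_scores(predict, X, y, n_repeats=10, seed=0):
    """Mean increase in MSE when each feature column is shuffled."""
    rng = np.random.default_rng(seed)
    baseline = np.mean((predict(X) - y) ** 2)
    scores = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling breaks the link between feature j and the target.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            increases.append(np.mean((predict(X_perm) - y) ** 2) - baseline)
        scores[j] = np.mean(increases)
    return scores

# Synthetic data (assumed): the target depends strongly on feature 0,
# weakly on feature 1, and not at all on feature 2.
data_rng = np.random.default_rng(1)
X = data_rng.normal(size=(500, 3))
y = 3.0 * X[:, 0] + 0.5 * X[:, 1]

# A least-squares linear model stands in for the system being explained.
weights, *_ = np.linalg.lstsq(X, y, rcond=None)

def predict(data):
    return data @ weights

scores = permutation_scores(predict, X, y)
for j, score in enumerate(scores):
    print(f"feature {j}: error increase {score:.4f}")
```

Because the method only needs a prediction function, it can be applied to a model whose internals are not accessible. It can mislead when features are strongly correlated, so the scores are best read as a guide rather than a definitive ranking.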

What is the role of human oversight in interpreting AI results?

Human oversight plays a critical role in interpreting AI results by providing an additional layer of validation and verification. Human experts can review and validate the results of AI systems to ensure accuracy and reliability, and can also provide context and domain knowledge to help interpret the results.

In conclusion, interpreting AI results accurately is a complex task that requires attention to the challenges above and to the practices that address them. Building AI systems that are transparent, explainable, and fair, and applying model validation, result verification, and human oversight, makes AI results more accurate, more reliable, and less biased. As AI becomes more pervasive, regulatory frameworks, industry standards, and explainable AI techniques will play a crucial role in building trust in these systems.